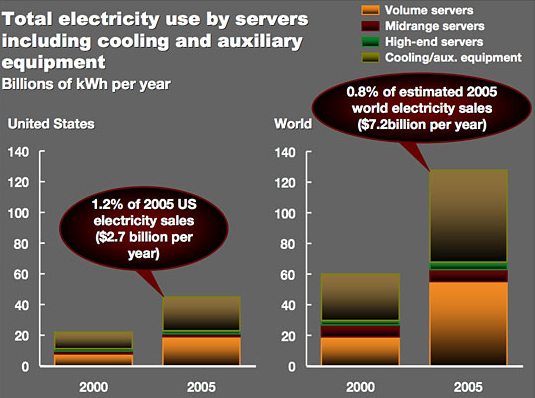

They found that U.S. servers accounted for 0.6 percent of overall electricity consumption; add the power drawn by the cooling equipment and the figure doubles to 1.2 percent of all electricity used in the U.S. This is the same as the amount of electricity used by TVs.

Between 2000 and 2005, server electricity use grew at a rate of about 14 percent each year, meaning that it roughly doubled in five years. The 2005 estimate shows that servers and associated equipment burned through 5 million kW of power, which cost U.S. businesses roughly $2.7 billion.
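To see how a yearly rate turns into a five-year figure, here is a quick back-of-the-envelope sketch in Python. The function name `growth_factor` is made up for illustration, and the 14 percent input is the rounded figure quoted above, so the result is only approximate: compounding it over five years gives a factor of about 1.9, which is close to a doubling.

```python
def growth_factor(annual_rate: float, years: int) -> float:
    """Return the total multiplier after compounding annual_rate for the given years."""
    return (1.0 + annual_rate) ** years


# 14 percent a year, compounded over the five years from 2000 to 2005
factor = growth_factor(0.14, 5)
print(f"Five-year growth factor: {factor:.2f}x")  # prints "Five-year growth factor: 1.93x"
```

Run in reverse, the same arithmetic says an exact doubling in five years would take an annual rate of a little under 15 percent.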

Koomey notes that this represents the output of five 1 GW power plants. Or, to put it another way, it's 25 percent more than the total possible output from the Chernobyl plant, back when it was actually churning out power and not sitting there, radiating the area.

If current trends continue, server electricity usage will jump 40 percent by 2010, driven in part by the rise of cheap blade servers, which push overall power use up faster than larger servers do. Koomey notes that virtualization and consolidation of servers will work against this trend, though, and it's difficult to predict what will happen as data centers increasingly standardize on power-efficient chips.