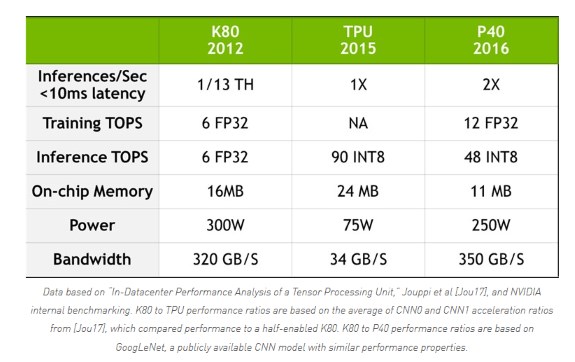

When attacked, NVIDIA isn't the sort of company to sit back, especially as artificial intelligence is becoming the company's new bread and butter. On the company's blog, CEO Jen-Hsun Huang published a new article arguing that the results look better if you compare the TPU with the current-generation Pascal-based P40.

Despite the updated comparison, the Google TPU is still almost twice as fast as the Pascal-based Tesla card and it also requires just a quarter of the power. However, one interesting caveat is that the TPU is exclusively aimed at inference, whereas the Tesla card is also designed to be used for training.

While Google and NVIDIA chose different development paths, there were several themes common to both our approaches. Specifically:

- AI requires accelerated computing. Accelerators provide the significant data processing demands of deep learning in an era when Moore’s law is slowing.
- Tensor processing is at the core of delivering performance for deep learning training and inference.
- Tensor processing is a major new workload enterprises must consider when building modern data centers.
- Accelerating tensor processing can dramatically reduce the cost of building modern data centers.

The technology world is in the midst of a historic transformation already being referred to as the AI Revolution. The place where its impact is most obvious today is in the hyperscale data centers of Alibaba, Amazon, Baidu, Facebook, Google, IBM, Microsoft, Tencent and others. They need to accelerate AI workloads without having to spend billions of dollars building and powering new data centers with CPU nodes. Without accelerated computing, the scale-out of AI is simply not practical.