Posted on Wednesday, February 14 2018 @ 10:48 CET by Thomas De Maesschalck

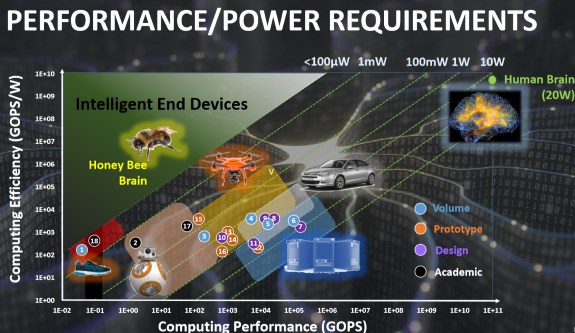

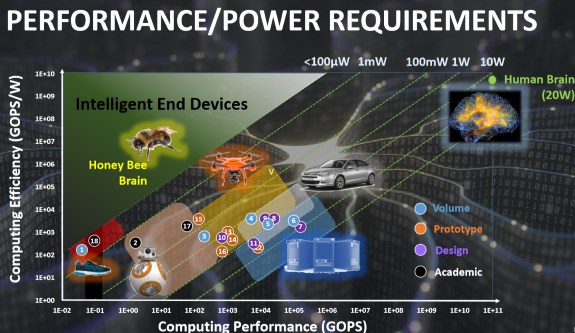

An increasing number of AI researchers are looking at the human brain for new ways to improve computer chips and artificial intelligence. So why take cues from biology? It's pretty simple, really: the human brain can process an estimated $10^{11}$ GOPS (giga-operations per second) while consuming an estimated 20 W. Nothing in the electronics world comes close to this performance-per-watt ratio. Full details at EE Times.
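To put those two estimates in perspective, here is the energy per operation they imply. This takes both figures at face value; the result depends entirely on what the estimate counts as one "operation", so treat it as an order-of-magnitude illustration only:

```latex
10^{11}\,\text{GOPS} = 10^{11} \times 10^{9}\,\text{ops/s} = 10^{20}\,\text{ops/s}
\qquad
E_{\text{op}} \approx \frac{20\,\text{W}}{10^{20}\,\text{ops/s}} = 2 \times 10^{-19}\,\text{J/op} = 0.2\,\text{aJ/op}
```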

> This is where the semiconductor industry can take its cue from biology. Traditional computing architecture struggles to meet power requirements, she said, largely because “it needs to consume energy, every time a processor and memory communicate.” By comparison, a brain synapse contains both memory and computing in a single architecture, she explained. This neat trick provides the basis for brain-inspired non-von Neumann computer architecture.
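The processor-memory argument can be made concrete with a toy count of data movements for a matrix-vector product `y = W @ x`, the core operation of a neural-network layer. This is a minimal sketch under loud assumptions. It counts values crossing the processor-memory boundary, not energy. It assumes each value crosses exactly once, with no caching. The "in-memory" case is idealized: weights stay where the multiply-accumulate happens, as in a synapse, and the converter and peripheral costs that real in-memory hardware pays are ignored. The function names are hypothetical.

```python
from __future__ import annotations


def von_neumann_transfers(n_out: int, n_in: int) -> int:
    """Values moved for y = W @ x when the n_out x n_in matrix W lives in
    memory separate from the processor (toy model: each value moves once)."""
    weight_reads = n_out * n_in  # every weight must be fetched before it is used
    input_reads = n_in           # the input vector is fetched once
    output_writes = n_out        # the result vector is written back once
    return weight_reads + input_reads + output_writes


def in_memory_transfers(n_out: int, n_in: int) -> int:
    """Same product when the weights are stored where the computation happens
    (synapse-like): only inputs go in and outputs come out."""
    return n_in + n_out


if __name__ == "__main__":
    for n in (10, 100, 1000):
        vn = von_neumann_transfers(n, n)
        im = in_memory_transfers(n, n)
        print(f"{n}x{n}: von Neumann {vn}, in-memory {im}, ratio {vn / im:.0f}x")
```

In this model the weight traffic grows with the size of the matrix, while the in-memory case grows only with the size of the vectors. The gap therefore widens as layers get larger.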

> Essential to brain-inspired operating principles are elements like spike coding and spike-timing-dependent plasticity (STDP). Consider the way neuron states are encoded in a system, she noted. In the past, neuron values were encoded using analog or digital values. A recent trend in neuromorphic computing, however, is to encode neuron values as pulses or “spikes,” she explained. “Neurons have no clock and they are event-driven,” she said.
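The two ideas in the excerpt, event-driven spike coding and STDP, can be illustrated with a small Python sketch. It assumes a leaky integrate-and-fire (LIF) neuron and a pair-based exponential STDP rule. Both are common textbook choices, not anything the quoted researcher specified. The class and function names and all constants (time constants, threshold, learning rates) are hypothetical and chosen only for illustration. Input arrives as a sparse list of (time, synapse) spike events, and the neuron does work only when an event arrives, with no clock ticking in between.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class EventDrivenLIF:
    """Hypothetical leaky integrate-and-fire neuron updated only when a spike arrives.

    There is no global clock: between events the membrane potential decays
    analytically, so nothing is computed while the input is silent.
    """
    weights: list[float]
    tau_m: float = 20.0     # membrane time constant in ms (illustrative)
    threshold: float = 1.0  # firing threshold (illustrative)
    v: float = 0.0          # membrane potential
    last_t: float = 0.0     # time of the previous input event

    def on_spike(self, t: float, synapse: int) -> bool:
        """Integrate one input spike at time t; return True if the neuron fires."""
        # Exponential leak over the silent interval since the last event.
        self.v *= math.exp(-(t - self.last_t) / self.tau_m)
        self.last_t = t
        self.v += self.weights[synapse]
        if self.v >= self.threshold:
            self.v = 0.0  # reset after emitting an output spike
            return True
        return False


def stdp_delta(t_pre: float, t_post: float,
               a_plus: float = 0.01, a_minus: float = 0.012,
               tau_plus: float = 20.0, tau_minus: float = 20.0) -> float:
    """Pair-based STDP: strengthen if pre fires before post, weaken if after."""
    dt = t_post - t_pre
    if dt > 0:
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


if __name__ == "__main__":
    neuron = EventDrivenLIF(weights=[0.6, 0.6])
    # Spike coding: input is a sparse list of (time_ms, synapse) events,
    # not values sampled on every clock tick.
    events = [(1.0, 0), (3.0, 1), (40.0, 0)]
    last_pre: dict[int, float] = {}
    last_post: float | None = None
    for t, syn in events:
        # Pre spike arriving after an earlier output spike: depress this synapse.
        if last_post is not None:
            neuron.weights[syn] += stdp_delta(t, last_post)
        last_pre[syn] = t
        if neuron.on_spike(t, syn):
            last_post = t
            print(f"output spike at t={t} ms")
            # Pair each synapse's latest pre spike with this output spike;
            # strictly earlier pre spikes are potentiated.
            for s, t_pre in last_pre.items():
                neuron.weights[s] += stdp_delta(t_pre, t)
    print([round(w, 4) for w in neuron.weights])
```

With these sample events, the neuron fires at t = 3 ms. Synapse 0, whose spike at t = 1 ms preceded that output, is strengthened. Its later spike at t = 40 ms arrives after the output and weakens it slightly. The design choice to note is that the membrane decay is computed in closed form between events, so cost scales with the number of spikes rather than with elapsed time.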