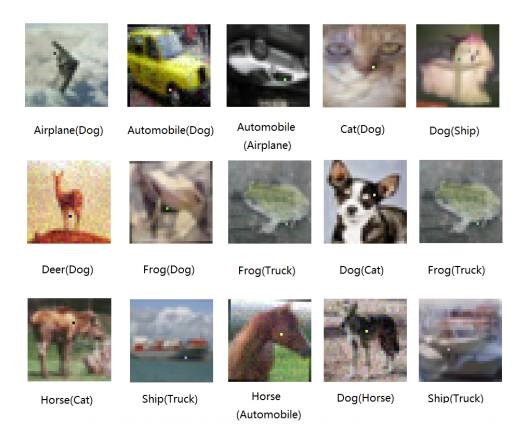

As explained in a paper, the researchers came up with the startling conclusion that a one-pixel attack worked on nearly three-quarters of standard training images.

Not only that, but the boffins didn't need to know anything about the inside of the DNN – as they put it, the attack needed only its “black box” output of probability labels to function.

The attack was based on a technique called “differential evolution” (DE), an optimisation method which in this case identified the best target for their attack (the paper tested attacks against one, three, and five pixels).
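To make the idea concrete, here is a minimal sketch of a DE-driven one-pixel attack. It is an illustration under stated assumptions, not the researchers' code: `toy_black_box` is a hypothetical stand-in for a DNN, the image is a toy 8 x 8 greyscale grid, each candidate is an `(x, y, value)` triple, and the population size, generation count, and scale factor are illustrative choices. The attacker only ever reads the model's output probabilities.

```python
import math
import random

SIZE = 8  # toy 8 x 8 greyscale image, pixel values in [0, 1] (assumption)


def toy_black_box(image):
    """Hypothetical stand-in for a DNN: returns [p(class 0), p(class 1)].

    The attacker only calls this function; it never reads the weights.
    """
    logit = -0.5
    for y in range(SIZE):
        for x in range(SIZE):
            # Smooth bump of weights centred on pixel (x=5, y=3).
            weight = 3.0 * math.exp(-((x - 5) ** 2 + (y - 3) ** 2) / 8.0)
            logit += weight * (image[y][x] - 0.5)
    p1 = 1.0 / (1.0 + math.exp(-logit))
    return [1.0 - p1, p1]


def apply_pixel(image, candidate):
    """Return a copy of image with one pixel overwritten by (x, y, value)."""
    x, y, value = candidate
    col = min(SIZE - 1, max(0, int(x)))
    row = min(SIZE - 1, max(0, int(y)))
    perturbed = [r[:] for r in image]
    perturbed[row][col] = min(1.0, max(0.0, value))
    return perturbed


def one_pixel_attack(model, image, true_class, pop_size=40, generations=30,
                     scale=0.5, seed=0):
    """Minimise the model's confidence in true_class by changing one pixel."""
    rng = random.Random(seed)
    bounds = [(0.0, SIZE), (0.0, SIZE), (0.0, 1.0)]

    def fitness(candidate):
        # Lower is better: probability the model still assigns to true_class.
        return model(apply_pixel(image, candidate))[true_class]

    population = [[rng.uniform(lo, hi) for lo, hi in bounds]
                  for _ in range(pop_size)]
    scores = [fitness(c) for c in population]

    for _ in range(generations):
        for i in range(pop_size):
            # Mutation: combine three other randomly chosen candidates.
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            trial = [
                min(hi, max(lo, population[a][k]
                            + scale * (population[b][k] - population[c][k])))
                for k, (lo, hi) in enumerate(bounds)
            ]
            # Selection: the child replaces its parent only if it scores lower.
            trial_score = fitness(trial)
            if trial_score < scores[i]:
                population[i], scores[i] = trial, trial_score

    best = min(range(pop_size), key=lambda i: scores[i])
    return apply_pixel(image, population[best])


original = [[0.5] * SIZE for _ in range(SIZE)]
adversarial = one_pixel_attack(toy_black_box, original, true_class=0)
```

Comparing `toy_black_box(original)` with `toy_black_box(adversarial)` shows whether the predicted class flipped. Attacking three or five pixels would amount to making each candidate a longer list of such triples; a colour image would need a value per channel.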

By changing five pixels in a 1,024-pixel photo, the researchers achieved a success rate of 87.3 percent. The big caveat here is that they used very small images. On a photo of 280,000 pixels, which is about 530 x 530 pixels, the attack would require altering 273 pixels, which is still relatively few. Full details at The Register.
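As a quick sanity check on the figures quoted above, the share of pixels altered can be worked out directly. This is plain arithmetic on the numbers in the text, with variable names chosen for illustration:

```python
import math

small_total, small_changed = 1024, 5        # small image, five-pixel attack
large_total, large_changed = 280_000, 273   # larger photo quoted above

small_share = small_changed / small_total   # fraction of pixels altered
large_share = large_changed / large_total

side = math.sqrt(large_total)               # side length if the photo is square

print(f"small image: {small_share:.2%} of pixels")
print(f"large photo: {large_share:.2%} of pixels, about {side:.0f} px per side")
```

That comes to just under half a percent of the small image and roughly a tenth of a percent of the larger one.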