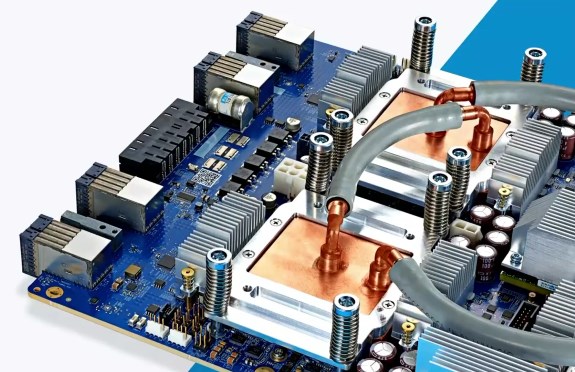

Google started developing its own chips because it needs increasingly more powerful hardware to run its machine learning algorithms. The new model is so powerful that a PCB with four TPU 3.0 chips requires watercooling.

Current pods based on TPU 2.0 feature 64 devices with four dual-core ASICs. Each of these pods has 4TB HBM and offers 11.5 petaflops of machine learning power. Pods based on TPU 3.0 will increase this to a massive 100 petaflops.

Cloud Tensor Processing Units (TPUs ) enable machine learning engineers and researchers to accelerate TensorFlow workloads with Google-designed supercomputers on Google Cloud Platform. This talk will include the latest Cloud TPU performance numbers and survey the many different ways you can use a Cloud TPU today - for image classification, object detection, machine translation, language modeling, sentiment analysis, speech recognition, and more. You'll also get a sneak peak at the road ahead.