In a blog post, a Google engineer explains that the TPU is roughly equivalent to fast-forwarding technology about seven years into the future:

The result is called a Tensor Processing Unit (TPU), a custom ASIC we built specifically for machine learning — and tailored for TensorFlow. We’ve been running TPUs inside our data centers for more than a year, and have found them to deliver an order of magnitude better-optimized performance per watt for machine learning. This is roughly equivalent to fast-forwarding technology about seven years into the future (three generations of Moore’s Law).

Not a lot of information is available, but analysts speculate that the TPU is focused on inference and may not do well at training.

TPU is tailored to machine learning applications, allowing the chip to be more tolerant of reduced computational precision, which means it requires fewer transistors per operation. Because of this, we can squeeze more operations per second into the silicon, use more sophisticated and powerful machine learning models and apply these models more quickly, so users get more intelligent results more rapidly.
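To make the reduced-precision idea concrete, here is a minimal sketch of a dot product computed with 8-bit integers instead of floating point. It uses a generic symmetric quantization scheme chosen purely for illustration; Google has not published how the TPU handles precision, so the function names (`quantize`, `quantized_dot`), the 8-bit width, and the sample values are all assumptions rather than a description of the chip.

```python
# Illustrative only: a generic symmetric 8-bit quantization scheme,
# NOT a description of how the TPU works internally.

def quantize(values, bits=8):
    """Map floats to signed integers that share one scale factor."""
    qmax = 2 ** (bits - 1) - 1              # 127 for 8 bits
    peak = max(abs(v) for v in values)
    scale = peak / qmax if peak else 1.0    # avoid dividing by zero
    return [round(v / scale) for v in values], scale


def quantized_dot(a, b, bits=8):
    """Dot product using integer multiply-accumulates only."""
    qa, scale_a = quantize(a, bits)
    qb, scale_b = quantize(b, bits)
    acc = sum(x * y for x, y in zip(qa, qb))  # integer arithmetic
    return acc * scale_a * scale_b            # rescale once at the end


# Hypothetical weights and inputs, made up for this example.
weights = [0.12, -0.83, 0.45, 0.07]
inputs = [1.50, 0.25, -0.60, 2.00]

exact = sum(w * x for w, x in zip(weights, inputs))
approx = quantized_dot(weights, inputs)

print(f"float result: {exact:.4f}")
print(f"8-bit result: {approx:.4f}")
print(f"abs error:    {abs(exact - approx):.4f}")
```

The integer version lands close to the floating-point answer while every multiply works on small integers, which is the kind of trade-off the quote describes: a little precision given up in exchange for cheaper operations.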

Google claimed the TPUs are three process generations ahead of the competition, said Kevin Krewell, senior analyst with Tirias Research. The TPUs are “likely optimized for a specific math precision, possibly 16-bit floating point or even lower-precision integer math,” Krewell said.

Work on the project started years ago, and the interesting part is that Google has already been using it: the chip was part of the secret sauce that helped Google's AI beat a human at Go. Besides AI competitions, the chip also has real-world applications. Google says it is using its TPUs to improve search result relevancy and to enhance the accuracy and quality of its mapping and navigation tools.

“It seems the TPU is focused on the inference part of CNN and not the training side,” Krewell said. “Inference only requires less complex math and it appears Google has optimized that part of the equation.

“On the training side, the requirements include very large data sets which the TPU may not be optimized for. In this regard, Nvidia's Pascal/P100 may still be an appealing product for Google,” he added.

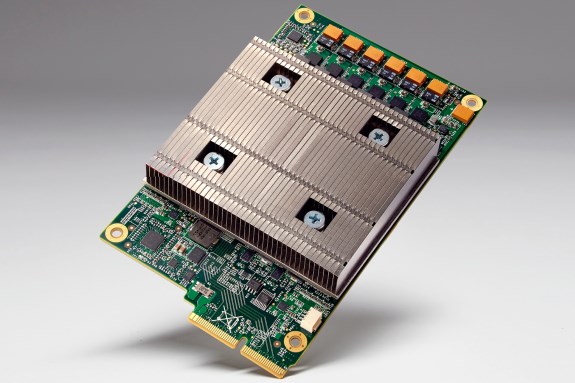

The fun part? You've already seen what TPUs can do. Google has been quietly using them for over a year, and they've handled everything from improving map quality to securing AlphaGo's victory over the human Go champion. The AI could both move faster and predict further ahead thanks to the chip, Google says. You won't get to buy the chip yourself, alas, but you might just notice its impact as AI becomes an ever more important part of Google's services.

The TPU card pictured here fits into the HDD bay of data center racks.