The TPU is a 28nm chip running at 700MHz, it has a 40W TDP and is designed to accelerate the Google TensorFlow algorithm. The TPU has 65,536 8-bit multiply-accumulate units, 24MB cache, a 7ms latency target and it delivers 92 tera-operations per second.

These chips are used in Google's servers to speed up inference jobs for a wide range of services that use machine learning techniques to improve accuracy and performance. For example, Google uses it to improve the accuracy of Image Search, to enhance the accuracy of voice recognition and to deliver better machine translations.

Because the chip was designed a couple of years ago, Google compared it with 2015 hardware like the Intel Haswell server CPU and NVIDIA's K80 GPU. The paper reveals Google's custom chip can handle the inference jobs more than an order of magnitude faster than standard hardware. The TPU speeds up Google's machine learning jobs by a factor of 15 to 30 times, while also delivering performance per Watt that is 30 to 80 times better than what's possible with Intel and NVIDIA hardware.

In benchmarks in 2015 using Google’s machine-learning jobs, the TPU ran 15 to 30 times faster and delivered 30 to 80 times better performance per watt than Intel’s Haswell server CPU and Nvidia’s K80 GPU. “The relative incremental-performance/watt — which was our company’s justification for a custom ASIC — is 41 to 83 for the TPU, which lifts the TPU to 25 to 29 times the performance/watt of the GPU,” the paper reports.Google acknowledges newer CPUs and GPUs will run inference faster but they also point out there's lots of room to improve the TPU as the chip was designed over a relatively short 15-month design schedule and does not include a lot of energy saving features. The paper claims an updated TPU design could be twice or thrice as fast, and boost performance/Watt to a level of nearly 70 times that of the NVIDIA K80 GPU or over 200 times that of the Intel Haswell CPU.

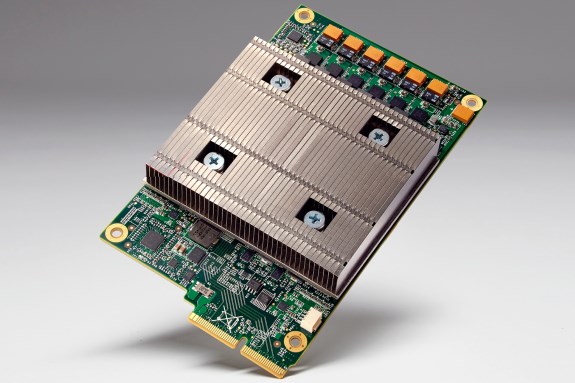

The Google TPU card is pictured below.