GPU performance advances much faster than Moore's law would predict, largely because GPUs rely on a parallel architecture. And the innovation isn't confined to the chips themselves; it spans the entire stack. As IEEE Spectrum observed:

> Just how fast does GPU technology advance? In his keynote address, Huang pointed out that Nvidia’s GPUs today are 25 times faster than five years ago. If they were advancing according to Moore’s law, he said, they only would have increased their speed by a factor of 10.
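
The "factor of 10" is consistent with Moore's law read as a doubling every 18 months. That doubling period is an assumption here, since the keynote figure does not state one. A minimal sketch of the comparison, also converting both five-year totals into compound annual growth rates:

```python
# Assumption: Moore's law modeled as performance doubling every 18 months
# (1.5 years). This period is an illustrative choice, not stated in the quote.
YEARS = 5
DOUBLING_PERIOD_YEARS = 1.5


def moores_law_factor(years: float, doubling_period: float = DOUBLING_PERIOD_YEARS) -> float:
    """Speedup expected after `years` if performance doubles every `doubling_period` years."""
    return 2 ** (years / doubling_period)


def annual_growth(total_factor: float, years: float) -> float:
    """Compound annual growth factor that yields `total_factor` over `years`."""
    return total_factor ** (1 / years)


moore = moores_law_factor(YEARS)  # about 10.1x over five years
gpu = 25.0                        # the 25x figure quoted for Nvidia GPUs

print(f"Moore's law over {YEARS} years: {moore:.1f}x")
print(f"Implied annual growth, Moore's law: {annual_growth(moore, YEARS):.2f}x per year")
print(f"Implied annual growth, GPUs: {annual_growth(gpu, YEARS):.2f}x per year")
```

Under this assumption, the quoted 25x works out to roughly 1.9x per year, versus roughly 1.6x per year for Moore's law.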

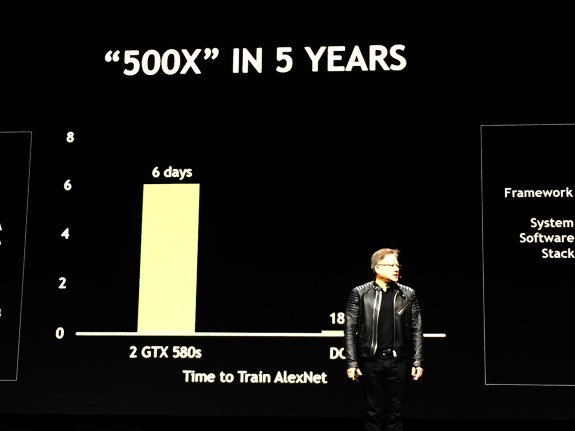

> Huang later considered the increasing power of GPUs in terms of another benchmark: the time to train AlexNet, a neural network trained on 15 million images. He said that five years ago, it took AlexNet six days on two of Nvidia’s GTX 580s to go through the training process; with the company’s latest hardware, the DGX-2, it takes 18 minutes—a factor of 500.
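
The "factor of 500" is a rounded figure. Converting both training times from the quote into minutes gives the exact ratio:

```python
# Training times as quoted: six days on two GTX 580s versus 18 minutes on a DGX-2.
MINUTES_PER_DAY = 24 * 60

old_minutes = 6 * MINUTES_PER_DAY  # 8,640 minutes
new_minutes = 18

speedup = old_minutes / new_minutes
print(f"{old_minutes} min / {new_minutes} min = {speedup:.0f}x")  # 480x, i.e. roughly 500x
```

The exact ratio is 480x, which rounds to the quoted "factor of 500".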