At today’s Xilinx Developer Forum in San Jose, Calif., our CEO, Victor Peng, was joined by AMD CTO Mark Papermaster for a Guinness. But not the kind that comes in a pint – the kind that comes in a record book.*

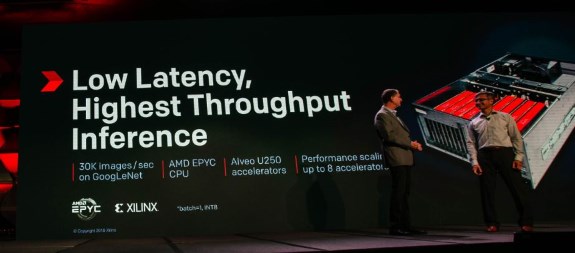

The companies revealed that AMD and Xilinx have been jointly working to connect AMD EPYC CPUs and the new Xilinx Alveo line of acceleration cards for high-performance, real-time AI inference processing. To back it up, they unveiled a world-record* inference throughput of 30,000 images per second!

The impressive system, which will be featured in the Alveo ecosystem zone at XDF today, leverages two AMD EPYC 7551 server CPUs with their industry-leading PCIe connectivity, along with eight of the freshly announced Xilinx Alveo U250 acceleration cards. The inference performance is powered by Xilinx ML Suite, which allows developers to optimize and deploy accelerated inference and supports numerous machine learning frameworks such as TensorFlow. The benchmark was performed on GoogLeNet*, a widely used convolutional neural network.
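To put the headline number in per-card terms, here is a minimal back-of-the-envelope sketch using only the figures quoted in this post (30,000 images per second, eight Alveo U250 cards). It assumes the work is split evenly across the cards, which the announcement does not state; the function and variable names are purely illustrative.

```python
# Figures quoted in the post.
TOTAL_IMAGES_PER_SEC = 30_000  # aggregate inference throughput
NUM_CARDS = 8                  # Alveo U250 cards in the system


def per_card_throughput(total_images_per_sec: float, num_cards: int) -> float:
    """Average throughput per card, ASSUMING an even split across cards."""
    return total_images_per_sec / num_cards


per_card = per_card_throughput(TOTAL_IMAGES_PER_SEC, NUM_CARDS)

# Average interval between completed images on one card, in microseconds.
# This is derived from throughput only; it is not a measured latency.
interval_us = 1_000_000 / per_card

print(f"Per-card throughput: {per_card:.0f} images/sec")
print(f"Average interval per image, per card: {interval_us:.1f} us")
```

Under that even-split assumption, each card would average 3,750 images per second. Note that this is a throughput-derived average and says nothing about the end-to-end latency of any single image.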

AMD and Xilinx share a common vision around the evolution of computing to heterogeneous system architecture and have a long history of technical collaboration. Both companies have optimized drivers and tuned performance for interoperability between AMD EPYC CPUs and Xilinx FPGAs. We are also collaborating with others in the industry on a cache-coherent interconnect for accelerators (the CCIX Consortium – pronounced “see-six”), focused on enabling cache coherency and shared memory across multiple processors.

AMD EPYC is the perfect CPU platform for accelerating artificial intelligence and high-performance computing workloads. With 32 cores, 64 threads, 8 memory channels with up to 2 TB of memory per socket, and 128 PCIe lanes coupled with the industry’s first hardware-embedded x86 server security solution, EPYC is designed to deliver the memory capacity, bandwidth, and processor cores to efficiently run the memory-intensive workloads commonly seen in AI and HPC. With EPYC, customers can collect and analyze larger data sets much faster, helping them solve complex problems significantly sooner.

Xilinx and AMD see a bright future in their technology collaboration. Our roadmaps are strongly aligned, pairing the high-performance AMD EPYC server and graphics processors with Xilinx acceleration platforms across the Alveo accelerator cards, as well as the forthcoming Versal portfolio.

So, raise a pint to the future of AI inference and innovation for heterogeneous computing platforms. And don’t forget to stop by and see the system in action in the Alveo ecosystem zone at the Fairmont hotel.

(Full disclosure – this has not yet been verified by Guinness themselves, but we hope to make a trip to Dublin soon!)

*Running with a batch size of 1 and INT8 precision.

At the same event, Xilinx also revealed Versal, a new 7nm adaptive compute acceleration platform (ACAP) for the AI market. It aims to perform inference jobs several times faster and more efficiently than NVIDIA GPUs.